Research Questions

Our research focuses on two interrelated questions:

(1) How do people perceive and recognize real-world visual scenes?

(2) How do people build up representations of their spatial environment in order to navigate from place to place?

Scene Perception

Previous work from our laboratory has identified specific regions of the brain that respond preferentially to real-world visual scenes, including the Parahippocampal Place Area (PPA), Retrosplenial Complex (RSC), and Occipital Place Area (OPA). We are currently investigating how these regions represent key aspects of scenes, such as their geometric boundaries, their spatial affordances, and their identity as navigational landmarks.

Spatial Navigation

Like other animals, humans rely on representations of the large-scale spatial structure of the world to navigate successfully from place to place. We are exploring the neural systems that support representations of large-scale, navigable spaces, such as a city or a college campus. We are especially interested in how people use visual information to orient themselves in space and to form cognitive maps of the world. An important goal is to understand the fundamental neural and cognitive mechanisms that underlie navigation in both physical and abstract spaces.

A recent review of the scene perception literature is here. Recent reviews of the spatial navigation literature are here and here.

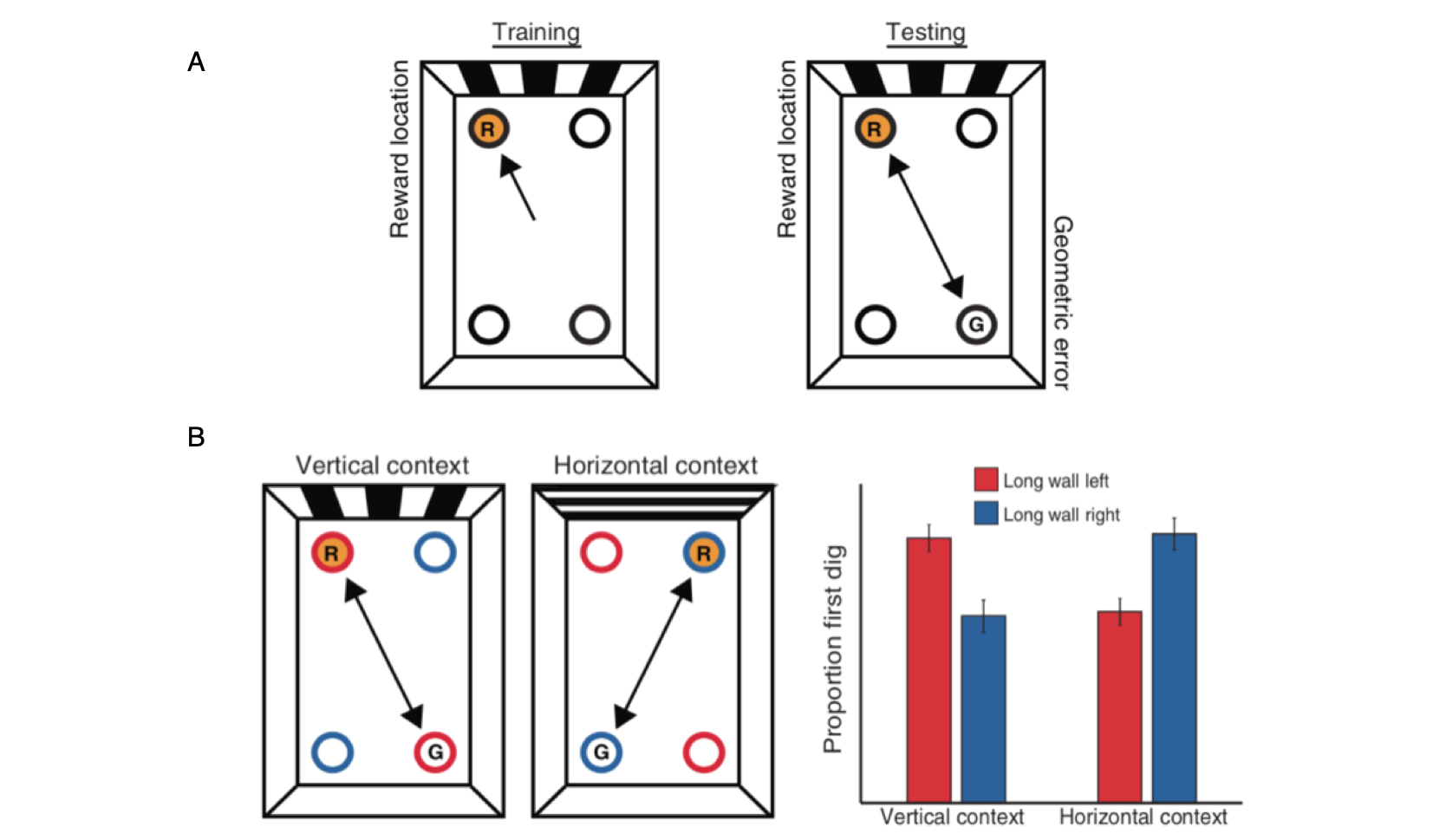

PPA and RSC respond preferentially to scenes.

The Parahippocampal Place Area (PPA) and Retrosplenial Complex (RSC) are shown on a reference brain, with voxels that respond more strongly to scenes than to objects shown in orange. Bar charts show the fMRI response in the PPA and RSC to six stimulus categories, plotted as percent signal change relative to a fixation (no-stimulation) baseline.

Data: Epstein, R. A. (2008). Trends in Cognitive Sciences, 12: 388-396.

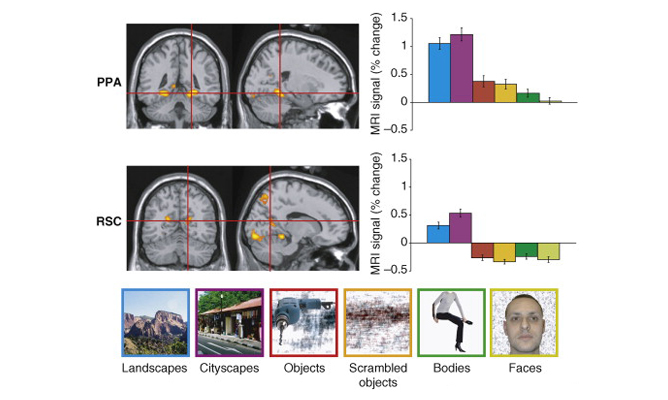

OPA is causally involved in processing of environmental boundaries.

Subjects were taught the locations of four target objects in a circular arena. Two objects (blue) remained fixed relative to the arena’s boundary, while the other two moved in tandem with a reference object (red). Transcranial magnetic stimulation (TMS) of the occipital place area (OPA) disrupted spatial memory for the boundary-referenced objects but not for the object-referenced objects.

Data: Julian, J. B., Ryan, J., Hamilton, R. H., & Epstein, R. A. (2016). Current Biology, 26(8): 1104-1109.

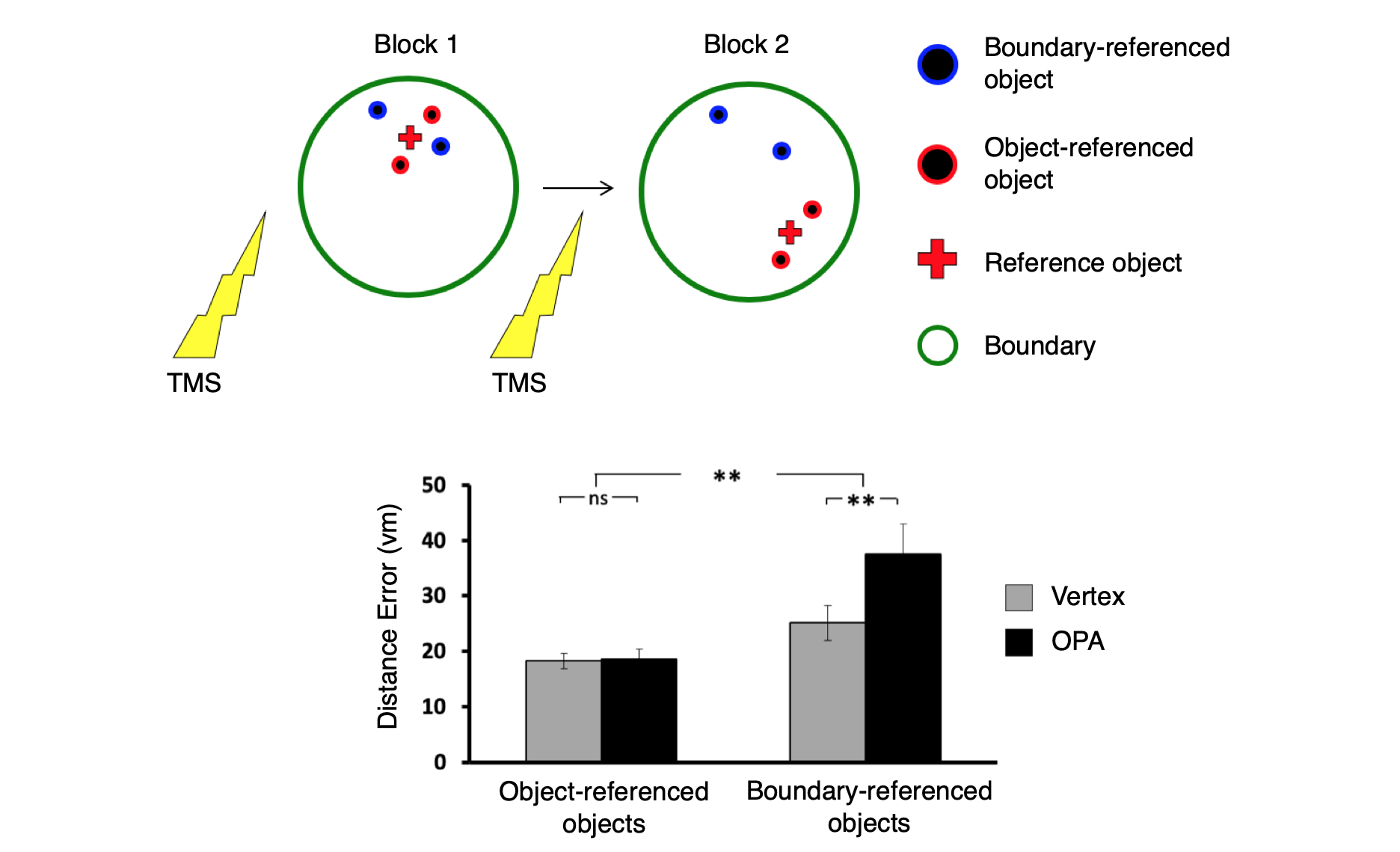

OPA encodes navigational affordances of scenes.

(A) Navigational affordances of scenes were quantified by asking raters to draw movement paths through the scenes. Scenes with more similar paths elicited more similar multivoxel activation patterns in OPA and PPA.

(B) Representational dissimilarity matrix showing the pairwise dissimilarities in navigational affordances among 50 scenes.

(C) A whole-brain searchlight analysis shows navigational affordance coding in OPA (green outline) for artificial scenes (top) and natural scenes (bottom).

Data: Bonner, M. F. & Epstein, R. A. (2017). Proceedings of the National Academy of Sciences, 114(18): 4793-4798.
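The logic behind panels (A) and (B) can be illustrated with a minimal representational similarity analysis (RSA) sketch. It assumes that each scene's navigational affordances are summarized as a hypothetical feature vector (for instance, a coarse histogram of drawn path directions), uses 1 − Pearson r as the dissimilarity measure, and compares the model and neural RDMs with a Spearman correlation over their off-diagonal entries. All data are simulated placeholders and the function names are illustrative; this is not the analysis code from Bonner & Epstein (2017).

```python
import numpy as np


def rdm(features):
    """Dissimilarity matrix (1 - Pearson r) between the rows of `features`."""
    return 1.0 - np.corrcoef(features)


def _ranks(x):
    """Rank-transform a 1-D array (assumes no ties, true for continuous data)."""
    ranks = np.empty(len(x))
    ranks[np.argsort(x)] = np.arange(len(x))
    return ranks


def compare_rdms(rdm_a, rdm_b):
    """Spearman correlation between the upper triangles of two RDMs."""
    iu = np.triu_indices_from(rdm_a, k=1)  # off-diagonal entries only
    a, b = _ranks(rdm_a[iu]), _ranks(rdm_b[iu])
    return np.corrcoef(a, b)[0, 1]


# Simulated placeholders: 50 scenes, 8 hypothetical affordance features, 200 voxels.
rng = np.random.default_rng(0)
n_scenes, n_features, n_voxels = 50, 8, 200
affordance_features = rng.normal(size=(n_scenes, n_features))
mixing = rng.normal(size=(n_features, n_voxels))  # random feature-to-voxel weights
voxel_patterns = (affordance_features @ mixing
                  + 0.5 * rng.normal(size=(n_scenes, n_voxels)))

model_rdm = rdm(affordance_features)
neural_rdm = rdm(voxel_patterns)
print(f"model-neural RDM correlation: {compare_rdms(model_rdm, neural_rdm):.2f}")
```

A positive rank correlation between the two RDMs corresponds to the relationship described in the caption: scenes with more similar affordances have more similar voxel patterns.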

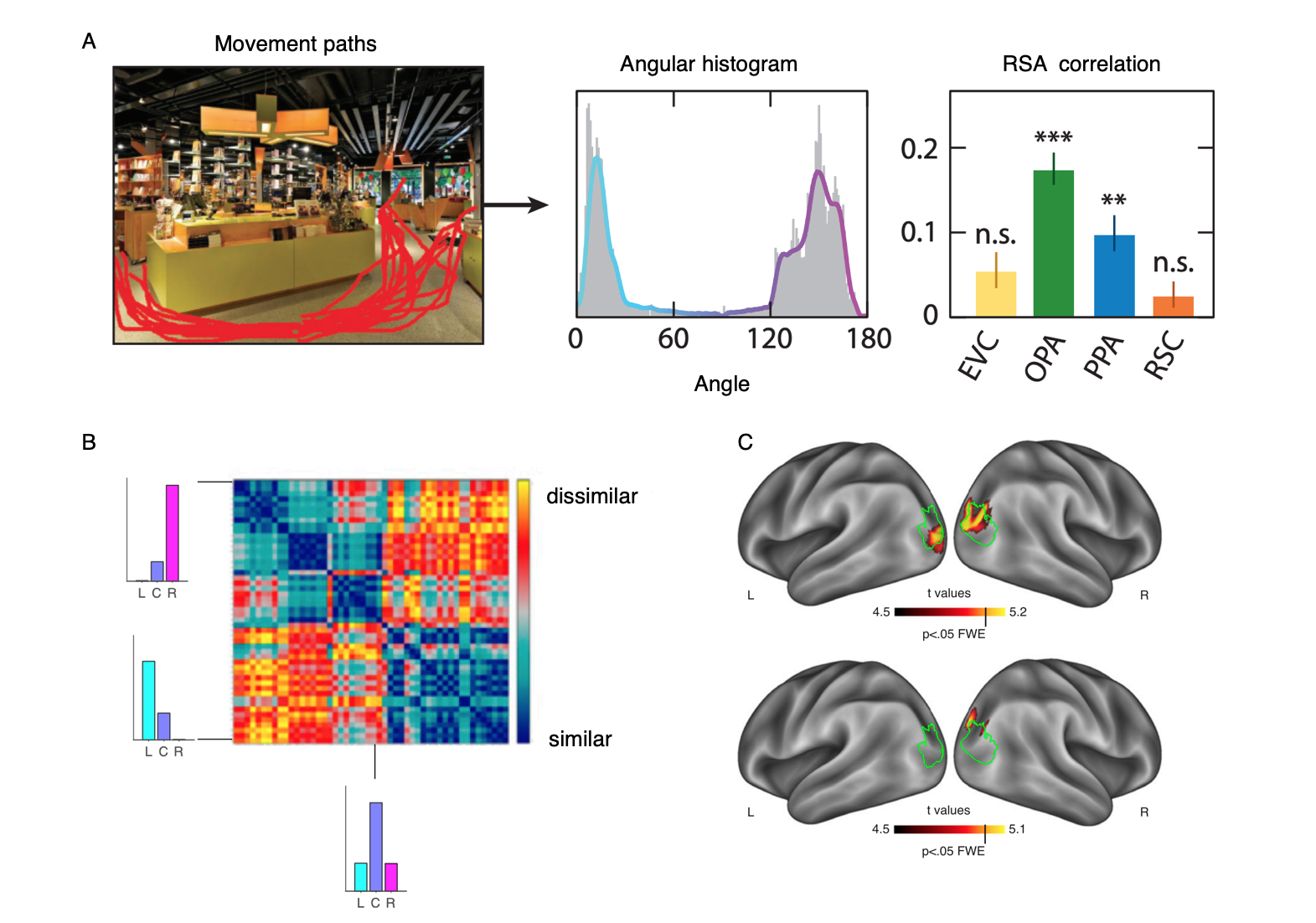

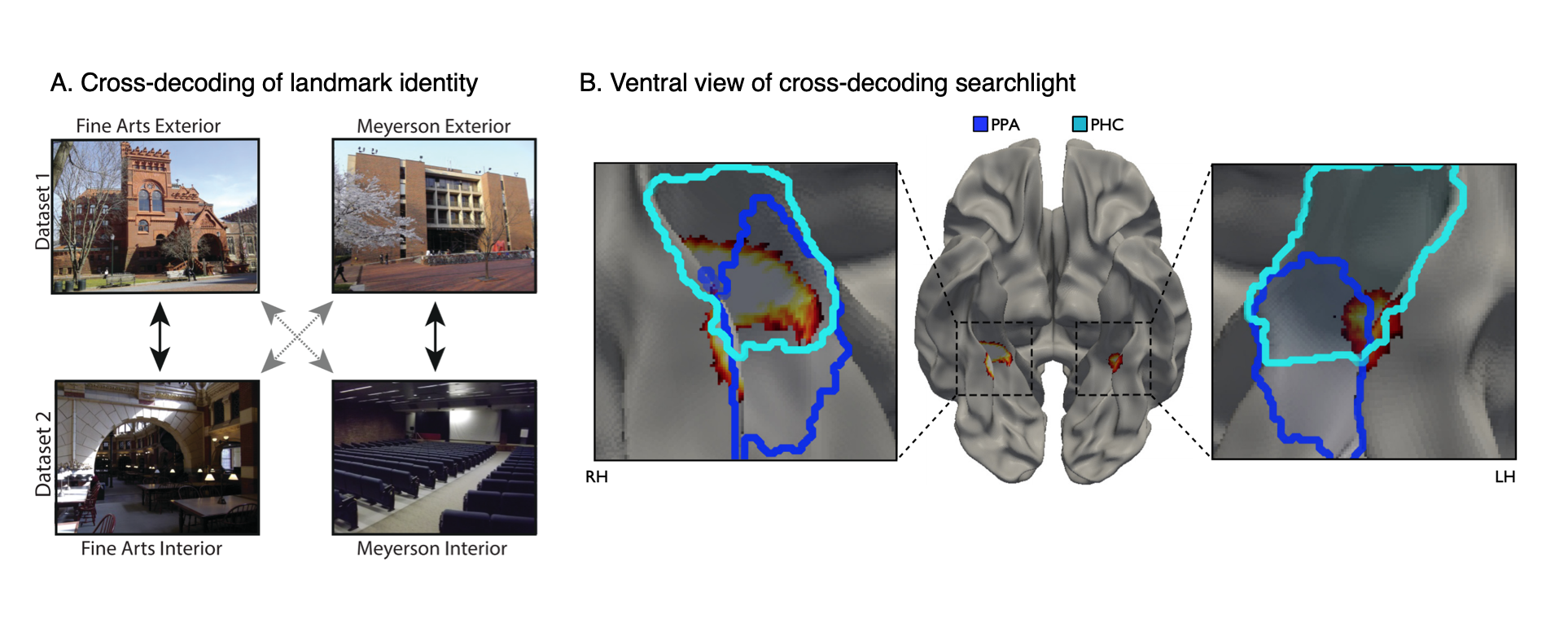

Landmark identity information in the parahippocampal place area (PPA).

(A) Subjects were shown images of the interiors and exteriors of campus landmarks in the scanner. We looked for multivoxel patterns that distinguished between the landmarks and generalized across interior and exterior views.

(B) A view-independent landmark representation was found in anterior PPA (dark blue = PPA; light blue = parahippocampal cortex).

Data: Marchette, S. A., Vass, L. K., Ryan, J. & Epstein, R. A. (2015). The Journal of Neuroscience, 35(44): 14896-14908.
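View-independent coding of the kind described in panel (A) is commonly tested with cross-classification: a classifier trained on patterns evoked by one view type (interiors) is tested on the other (exteriors), so above-chance accuracy requires information shared across views. The sketch below uses a correlation-based nearest-centroid classifier on simulated patterns in which each landmark has a hypothetical view-invariant component; the classifier, the simulation, and all names are assumptions for illustration, not details taken from Marchette et al. (2015).

```python
import numpy as np


def cross_decode(train_X, train_y, test_X, test_y):
    """Correlation-based nearest-centroid classifier trained on one view type
    and tested on the other. Returns the proportion of correct test labels."""
    labels = np.unique(train_y)
    centroids = np.stack([train_X[train_y == lab].mean(axis=0) for lab in labels])
    n_test = len(test_X)
    # Joint correlation matrix: test patterns first, then class centroids.
    r = np.corrcoef(test_X, centroids)[:n_test, n_test:]
    predicted = labels[np.argmax(r, axis=1)]
    return np.mean(predicted == test_y)


# Simulated placeholders: 4 landmarks, 10 repetitions per view, 100 voxels.
rng = np.random.default_rng(1)
n_landmarks, n_reps, n_voxels = 4, 10, 100
identity = rng.normal(size=(n_landmarks, n_voxels))  # assumed shared across views
view_offset = {"interior": rng.normal(size=n_voxels),
               "exterior": rng.normal(size=n_voxels)}


def simulate(view):
    """Hypothetical patterns: landmark identity + view-specific offset + noise."""
    X = np.concatenate([identity[k] + view_offset[view]
                        + rng.normal(size=(n_reps, n_voxels))
                        for k in range(n_landmarks)])
    y = np.repeat(np.arange(n_landmarks), n_reps)
    return X, y


X_int, y_int = simulate("interior")
X_ext, y_ext = simulate("exterior")
acc_int_to_ext = cross_decode(X_int, y_int, X_ext, y_ext)
acc_ext_to_int = cross_decode(X_ext, y_ext, X_int, y_int)
print(f"accuracy: {acc_int_to_ext:.2f}, {acc_ext_to_int:.2f} (chance = 0.25)")
```

Training in both directions and averaging is a common way to avoid favoring one view type.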

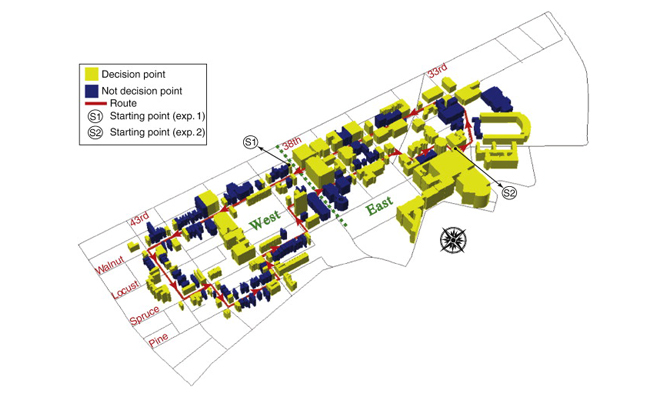

Study area for real-world route learning experiment.

Learning a route through a large-scale environment involves learning landmarks located at critical points along the route. To investigate the neural mechanisms supporting this ability, participants were led along a 3.8 km route around the Penn campus and surrounding neighborhood before being scanned with fMRI. A total of 180 buildings were located directly along the route: 85 at navigational decision points (yellow) and 95 at non-decision points (blue).

Data: Schinazi, V. R. & Epstein, R. A. (2010). NeuroImage, 53(2): 725-735.

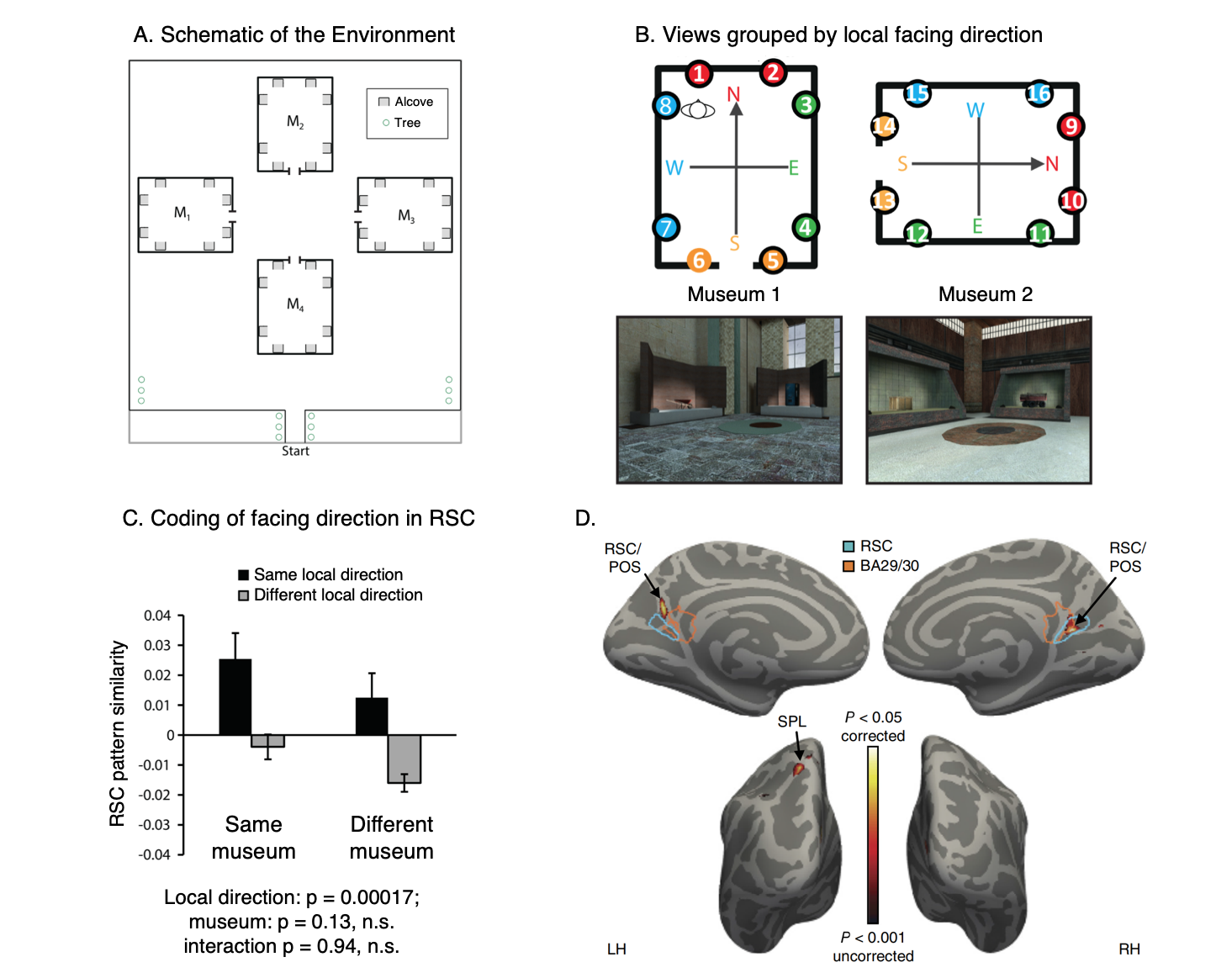

RSC represents space in locally-anchored coordinates.

(A) Subjects learned a virtual environment consisting of a courtyard with four “museums”, each containing eight “exhibits” (i.e., objects) in alcoves along the walls. They were then scanned while performing a judgment of relative direction (JRD) task: on each trial, they imagined themselves standing in front of one object and reported whether a second object was to their left or right.

(B) We tested for a local heading code, defined by the internal geometry of the museum. In a region exhibiting such a code, views facing the same local direction (indicated by colors) should have similar multivoxel patterns, even if they are in different museums.

(C) We observed such a pattern in RSC.

(D) A whole-brain searchlight analysis revealed a local heading code in RSC and in the superior parietal lobe (SPL).

Data: Marchette, S. A., Vass, L. K., Ryan, J. & Epstein, R. A. (2014). Nature Neuroscience, 17(11): 1598-1606.
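The test in panel (B) can be sketched as a comparison of pattern similarities restricted to pairs of views from different museums: if a region carries a local heading code, same-heading pairs should correlate more strongly than different-heading pairs. The simulation assumes four museums with four local headings each, and builds patterns from hypothetical heading and museum components plus noise. It illustrates the logic only and is not the published analysis.

```python
import numpy as np


def heading_effect(patterns, museum, heading):
    """Mean correlation of same-heading pairs minus different-heading pairs,
    using only pairs of views that come from different museums."""
    r = np.corrcoef(patterns)
    same, diff = [], []
    n = len(patterns)
    for i in range(n):
        for j in range(i + 1, n):
            if museum[i] == museum[j]:
                continue  # skip within-museum pairs
            if heading[i] == heading[j]:
                same.append(r[i, j])
            else:
                diff.append(r[i, j])
    return np.mean(same) - np.mean(diff)


# Simulated placeholders: 4 museums x 4 local headings, 100 voxels.
rng = np.random.default_rng(3)
n_museums, n_headings, n_voxels = 4, 4, 100
museum = np.repeat(np.arange(n_museums), n_headings)
heading = np.tile(np.arange(n_headings), n_museums)
heading_patterns = rng.normal(size=(n_headings, n_voxels))  # assumed heading code
museum_patterns = rng.normal(size=(n_museums, n_voxels))
patterns = (heading_patterns[heading] + museum_patterns[museum]
            + rng.normal(size=(museum.size, n_voxels)))

effect = heading_effect(patterns, museum, heading)
print(f"same-heading minus different-heading r: {effect:.2f}")
```

Restricting the comparison to cross-museum pairs keeps shared museum identity from inflating the same-heading similarity.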

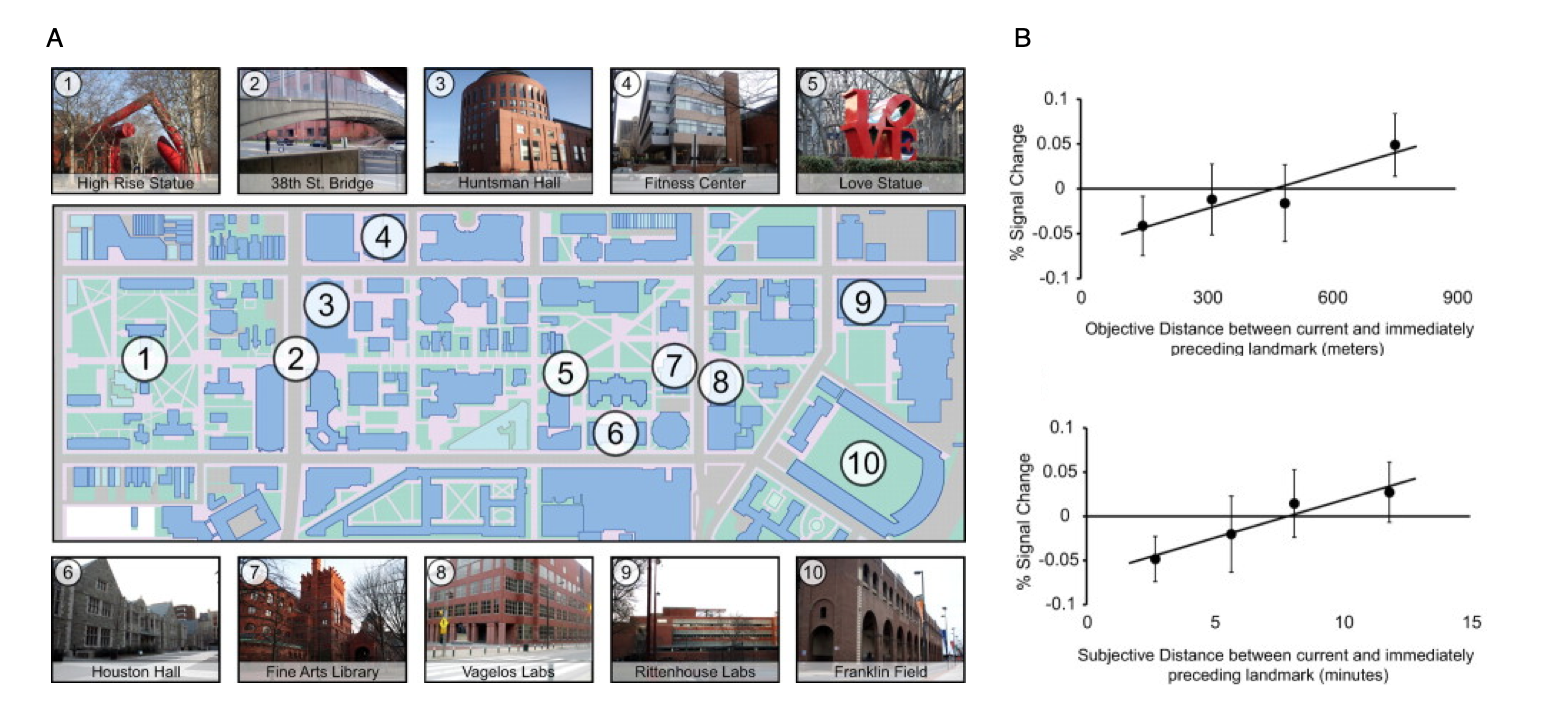

Cognitive maps in the human hippocampus.

(A) A key property of a cognitive map is that it preserves information about the distances between locations. To identify brain regions that support such a distance-preserving code, Penn students were scanned with fMRI while viewing images of 10 familiar campus landmarks, with 22 distinct photographs of each landmark.

(B) fMRI response in the anatomically defined left anterior hippocampus scaled with the real-world distance (top panel) and subjective distance (bottom panel) between landmarks shown on successive trials, indicating that this region supports a map-like representation of the campus.

Data: Morgan, L. K., MacEvoy, S. P., Aguirre, G. K. & Epstein, R. A. (2011). Journal of Neuroscience, 31(4): 1238-1245.
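The distance analysis in panel (B) amounts to regressing the response on each trial against the distance between the current landmark and the one shown on the previous trial. In this minimal sketch, the landmark coordinates, the trial sequence, and a linear increase of response with distance are all hypothetical assumptions chosen for illustration; the published study's model may differ.

```python
import numpy as np


def successive_distances(sequence, coords):
    """Euclidean distance between the landmark on each trial and the previous one."""
    xy = coords[sequence]
    return np.linalg.norm(np.diff(xy, axis=0), axis=1)


rng = np.random.default_rng(4)
coords = rng.uniform(0, 500, size=(10, 2))  # hypothetical landmark positions (metres)
sequence = rng.integers(0, 10, size=200)    # landmark index shown on each trial
dist = successive_distances(sequence, coords)  # one value per trial after the first

true_slope = 0.001  # assumed response increase per metre (arbitrary units)
response = 0.2 + true_slope * dist + 0.05 * rng.normal(size=dist.size)

# Least-squares line; np.polyfit returns the highest-degree coefficient first.
slope, intercept = np.polyfit(dist, response, 1)
print(f"estimated slope: {slope:.5f} per metre")
```

Substituting each subject's subjective distance estimates for `coords`-based distances would give the analogue of the bottom panel.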

Grid-like representations of visual space in entorhinal cortex (ERC).

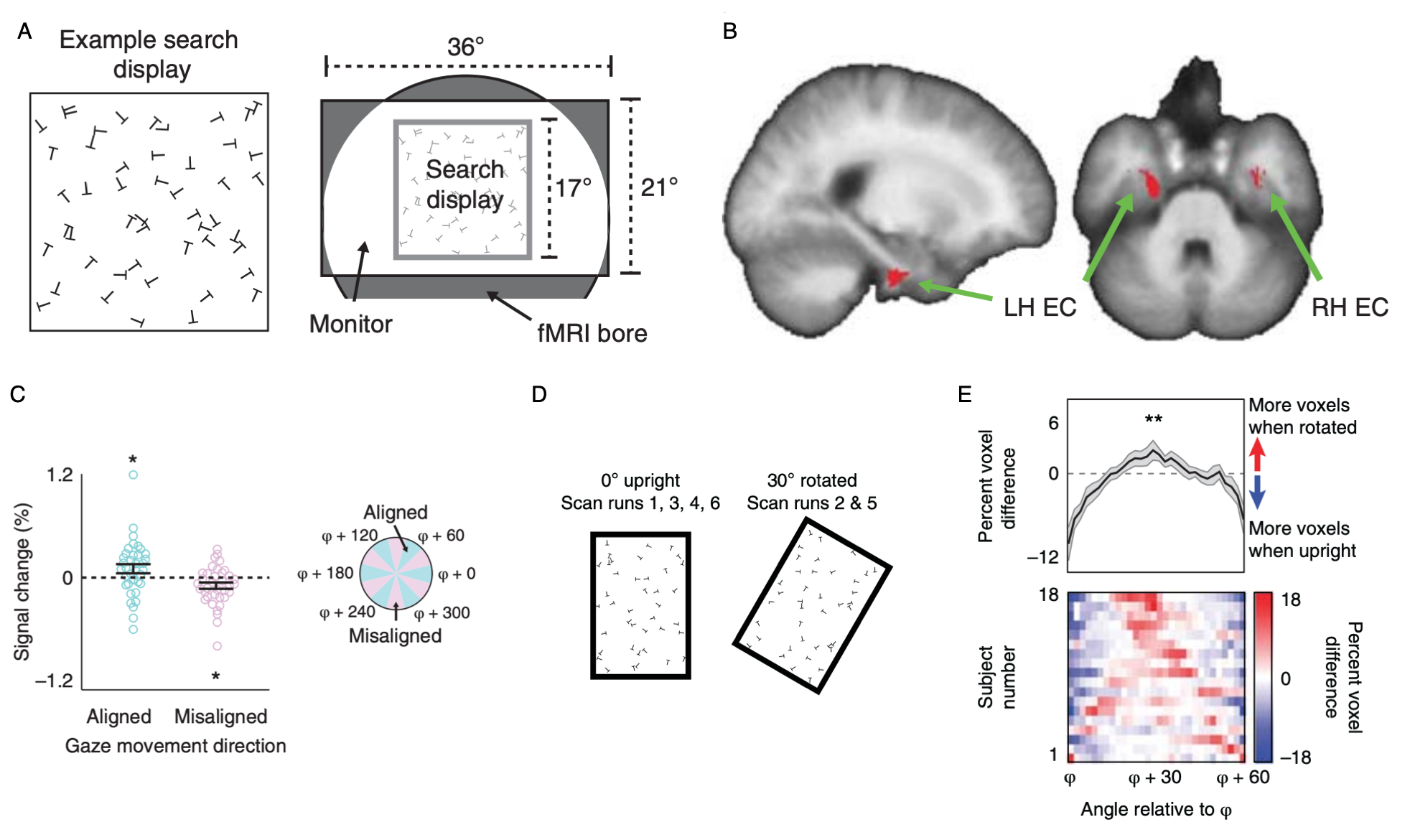

(A) To look for grid-cell-like coding of visual space in humans, subjects performed a visual search task (“search for a T among Ls”) in the MRI scanner.

(B) Voxels in entorhinal cortex exhibited a 6-fold modulation as a function of gaze-movement direction. This 6-fold modulation is a signature of grid cells.

(C) Percent signal change as a function of gaze-movement direction in a 2-mm sphere centered on the peak ERC voxel. The fMRI response was higher when gaze-movement direction was aligned with the putative grid axes.

(D) To understand the role of boundaries in controlling grid orientation, we ran a new experiment with a rectangular search display, which was either upright or tilted by 30 degrees.

(E) When the display was tilted by 30 degrees, the grid signal also rotated by 30 degrees, indicating that the grid is anchored to the boundaries of the search display. Similar boundary anchoring has been observed in grid cells in rats.

Data: Julian, J. B., Keinath, A. T., Frazzetta, G., & Epstein, R. A. (2017). Nature Neuroscience, 21(2): 191-194.
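Sixfold (grid-like) modulation of the kind shown in panels (B), (C), and (E) is commonly estimated by regressing the response on cos(6θ) and sin(6θ), where θ is movement direction; the two weights give the grid amplitude and an orientation that is defined only modulo 60 degrees. The sketch below applies this quadrature approach to simulated data with assumed orientations of 10 and 40 degrees for the upright and tilted displays. Real analyses typically estimate orientation on one half of the data and test it on the other; that step is omitted here, and none of this is the authors' code.

```python
import numpy as np


def fit_grid(theta, response):
    """Least-squares fit of response ~ b0 + b1*cos(6*theta) + b2*sin(6*theta).

    Returns (amplitude, orientation), with orientation in radians in [0, pi/3),
    since a sixfold pattern is only defined modulo 60 degrees."""
    X = np.column_stack([np.ones_like(theta), np.cos(6 * theta), np.sin(6 * theta)])
    _, b1, b2 = np.linalg.lstsq(X, response, rcond=None)[0]
    amplitude = np.hypot(b1, b2)
    orientation = (np.arctan2(b2, b1) / 6) % (np.pi / 3)
    return amplitude, orientation


rng = np.random.default_rng(5)
theta = rng.uniform(0, 2 * np.pi, size=500)  # simulated gaze-movement directions


def simulate(orientation_deg, amplitude=0.3, noise=0.1):
    """Hypothetical response with a sixfold modulation at the given orientation."""
    phi = np.deg2rad(orientation_deg)
    return (1.0 + amplitude * np.cos(6 * (theta - phi))
            + noise * rng.normal(size=theta.size))


amp_upright, phi_upright = fit_grid(theta, simulate(10))  # upright display
amp_tilted, phi_tilted = fit_grid(theta, simulate(40))    # display tilted 30 degrees
rotation = np.rad2deg((phi_tilted - phi_upright) % (np.pi / 3))
print(f"estimated grid rotation: {rotation:.1f} degrees")
```

Because a sixfold pattern repeats every 60 degrees, a 30-degree rotation is the point of maximal misalignment between the upright and tilted grids, so the rotation is computed modulo 60 degrees.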